What is Crawling in SEO?

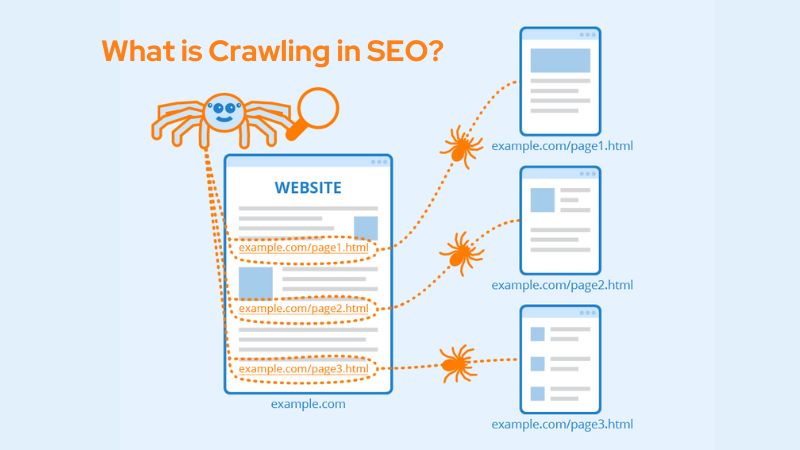

Crawling and indexing pages are integral components of SEO. Crawling is performed by computer programs called web bots or search engine crawlers, which scan websites and collect information that is then saved in a database.

Crawling and indexing allow search engines to keep their results fresh and relevant for searchers. One way to improve crawling is to make sure your pages are organized logically.

Crawling

Crawling is the process by which search engine robots (also called crawlers, bots, or spiders) discover new web pages. It involves several steps, such as fetching a web page, extracting its content and links, and storing that content in a database. This lets search engines quickly retrieve relevant results when users search for particular terms or queries.
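To make those steps concrete, here is a minimal breadth-first crawler sketch in Python. It assumes a hypothetical `fetch` callable that returns a page's HTML (or `None` on failure); the example serves a made-up three-page site from a dictionary instead of making HTTP requests. A real crawler would also need robots.txt handling, rate limiting and retries, which are omitted here.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects the visible text and the href targets of <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl: fetch a page, store its text, queue its links."""
    database = {}                  # url -> extracted text (the "database")
    frontier = deque([start_url])  # discovered URLs not yet fetched
    seen = {start_url}
    while frontier and len(database) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:           # e.g. a 404 or fetch error: skip the page
            continue
        parser = PageParser()
        parser.feed(html)
        database[url] = " ".join(parser.text_parts)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links like "/blog"
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return database


# A hypothetical three-page site served from memory instead of the network.
SITE = {
    "https://example.com/": '<h1>Home</h1><a href="/blog">Blog</a>',
    "https://example.com/blog": '<p>Posts</p><a href="/blog/crawling">Crawling</a>',
    "https://example.com/blog/crawling": "<p>How crawlers work</p>",
}

pages = crawl("https://example.com/", SITE.get)
print(sorted(pages))
```

Swapping `SITE.get` for a function built on `urllib.request.urlopen` would turn this into a network crawler; the in-memory version simply keeps the discovery logic easy to follow and test.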

Indexing requires significant computing power. The search engine records the words it finds on each page it fetches and uses complex algorithms to assess how well each page matches specific queries, which lets it place the most relevant results at the top of SERPs (search engine results pages).
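A search engine's real index and ranking algorithms are far more sophisticated, but the core data structure behind "recording the words on each page" is commonly described as an inverted index: a map from each word to the pages that contain it. The sketch below is a simplified illustration that assumes pages have already been crawled into a URL-to-text dictionary; its term-count scoring is a deliberately naive stand-in for real ranking.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Inverted index: word -> Counter mapping url -> occurrence count."""
    index = defaultdict(Counter)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word][url] += 1
    return index


def search(index, query):
    """Return URLs containing every query term, highest total count first."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set(index.get(terms[0], {}))
    for term in terms[1:]:
        matches &= set(index.get(term, {}))  # keep pages that have all terms
    score = {url: sum(index[t][url] for t in terms) for url in matches}
    return sorted(matches, key=lambda url: (-score[url], url))


# Hypothetical crawled pages.
PAGES = {
    "https://example.com/a": "Crawling and indexing explained. Crawling comes first.",
    "https://example.com/b": "Indexing stores words; crawling fetches pages.",
    "https://example.com/c": "Link building tips.",
}

index = build_index(PAGES)
print(search(index, "crawling indexing"))
```

Because the lookup goes straight from query words to matching pages, the engine never has to rescan page text at query time, which is why a pre-built index makes retrieval fast.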

To speed up indexing, use the URL Inspection tool in Google Search Console. It shows whether a particular page has already been indexed; if it has not, use the tool's Request Indexing option to ask Google to crawl it as soon as possible.

You can also speed up indexation by building backlinks from authoritative domains and refreshing pages that haven't been updated in a while. Make sure your server can handle the load Googlebot creates: in Google Search Console, check that your host status is green and that server response times stay below an acceptable threshold.

Indexing

Search engines index the pages they crawl so they can retrieve that content quickly when users run related searches. When a search engine crawls your website and indexes its pages, it adds them to its database of discovered URLs, where they can be found by users searching for the keywords your SEO campaigns target. This is what allows those campaigns to be effective.

Crawling and indexing a website can take time. How long depends on several factors, including the size of the site and how often its content is updated. Load speed also affects how often and how quickly Google crawls and indexes a site; slow load times or timeouts can significantly hurt its SEO performance.

To increase the crawlability and indexability of your site, consider these strategies:

On-page optimization

On-page optimization aims to make a site more crawlable by linking pages logically and keeping the content hierarchy clear. It also means making sure internal links point to relevant material and that page titles contain keyword-rich descriptions, which benefits both SEO and user experience. Finally, on-page optimization includes speed optimization, since slow websites frustrate visitors and reduce conversions.
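One way to check whether your internal linking produces a clear hierarchy is to compute each page's click depth from the home page with a breadth-first search. In this sketch the link graph and the three-click limit are hypothetical; in practice you would build the graph from a crawl of your own site. Pages with no internal path from the home page (orphans) are hard for crawlers to discover through your links.

```python
from collections import deque


def click_depths(links, home):
    """BFS from the home page; returns the minimum clicks to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def audit(links, home, max_depth=3):
    """Return (orphan pages, pages deeper than max_depth clicks)."""
    depth = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    orphans = sorted(all_pages - set(depth))  # no internal path from home
    too_deep = sorted(p for p, d in depth.items() if d > max_depth)
    return orphans, too_deep


# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
    "/landing": ["/"],  # links out, but nothing links to it
}

orphans, too_deep = audit(LINKS, "/")
print("orphans:", orphans)
print("too deep:", too_deep)
```

Linking deep pages from a category or hub page closer to the home page is one way to shorten their click depth.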

Search engines use large, sophisticated networks of computers to crawl billions of pages across the Internet. Programs known as "crawlers" or "bots" fetch these pages and analyze their contents; useful material is then indexed and displayed as search results for relevant queries.

Strategies for improving crawlability include creating a sitemap, submitting it to Google Search Console or Bing Webmaster Tools, and tracking how your pages are indexed. Watch for exclusions listed in Search Console's report of pages that are not indexed, as these may point to on-page optimization problems on your site.
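Sitemaps follow the sitemaps.org XML protocol: a `urlset` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, containing one `url` entry per page with a required `loc` and an optional `lastmod`. The following is a minimal Python sketch that builds one with the standard library; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """entries: iterable of (absolute URL, last-modified 'YYYY-MM-DD' or None)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    # Prepend a fixed UTF-8 declaration rather than relying on tostring's.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog", None),
])
print(sitemap)
```

The resulting file would typically be saved as `sitemap.xml` at the site root and then submitted through Google Search Console or Bing Webmaster Tools.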

One way to make better use of your crawl budget is to limit duplicate content on your site. Duplicate pages divert crawlers away from more important pages and can cause index bloat; canonical tags and a clear hierarchical link structure help address this problem.
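A canonical tag is a `<link rel="canonical" href="...">` element in a page's `<head>` that tells search engines which URL is the preferred version. As a rough sketch, exact duplicates can be found by hashing each page's normalized text and grouping URLs that share a hash. The "shortest URL wins" rule used here to pick the canonical version is a hypothetical choice for illustration, and this approach only catches exact duplicates (after whitespace and case normalization), not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def canonical_tags(pages):
    """Group exact-duplicate pages; return {url: canonical <link> tag}."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    tags = {}
    for urls in groups.values():
        if len(urls) < 2:
            continue  # unique content: nothing to consolidate
        # Hypothetical rule: the shortest URL becomes the canonical version.
        canonical = min(urls, key=lambda u: (len(u), u))
        for url in urls:
            tags[url] = f'<link rel="canonical" href="{canonical}">'
    return tags


# Hypothetical pages: the same product listing reachable via URL parameters.
PAGES = {
    "https://example.com/shoes": "Red running shoes. Free shipping.",
    "https://example.com/shoes?sort=price": "Red running  shoes.\nFree shipping.",
    "https://example.com/shoes?utm_source=mail": "red running shoes. free shipping.",
    "https://example.com/hats": "Wool hats.",
}

for url, tag in canonical_tags(PAGES).items():
    print(url, "->", tag)
```

Each duplicate, including the canonical page itself, gets a tag pointing at the same preferred URL, so crawlers can consolidate the group instead of treating each variant as a separate page.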

Off-page optimization

Search engines collect information from web pages and other sources in order to answer search queries; this process is known as indexing. The details of each web page are stored in a database that can be queried when users search, which allows search engines to deliver relevant results quickly and effectively.

Getting Googlebot to crawl your website faster is an important off-page optimization strategy: it helps new content reach audiences as soon as possible and lets you test on-page SEO changes sooner. Submitting sitemaps through Google Search Console is a straightforward way to do this, but don't oversubmit, as that could reduce crawl efficiency.

Another effective off-page optimization strategy is encouraging brand mentions. They help establish your credibility online and show search engines that your organization deserves to be found by searchers. Ways to earn them include finding resource pages that link to other websites and asking their owners to include yours, or studying competitors' backlink profiles for sites that might also link to you.
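Studying competitors' backlink profiles often comes down to a set comparison. Assuming you have exported lists of referring domains for your site and a few competitors from a backlink tool (the data below is made up), this sketch lists domains that link to at least a hypothetical minimum number of competitors but not to you; these are natural outreach candidates.

```python
from collections import Counter


def link_gap(your_domains, competitor_domains, min_competitors=2):
    """Referring domains linking to >= min_competitors competitors but not you."""
    counts = Counter()
    for domains in competitor_domains.values():
        counts.update(set(domains))  # count each domain once per competitor
    gap = [d for d, n in counts.items()
           if n >= min_competitors and d not in your_domains]
    # Most widely shared domains first, then alphabetical.
    return sorted(gap, key=lambda d: (-counts[d], d))


# Hypothetical referring-domain exports.
YOURS = {"news.example", "blog.example"}
COMPETITORS = {
    "competitor-a.example": {"news.example", "resources.example", "forum.example"},
    "competitor-b.example": {"resources.example", "forum.example", "directory.example"},
    "competitor-c.example": {"resources.example", "blog.example"},
}

print(link_gap(YOURS, COMPETITORS))
```

A domain that already links to several competitors is more likely to consider linking to you too, which is why the count threshold is used to rank opportunities.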